‘They’ll take our jobs’, ‘they’ll transform our lives’: the potential of robots seems never-ending, and it is both feared and admired.

But several decades ago, one man became the first human to be killed by a robot.

On 25 January, 1979, Robert Williams was asked to scale a massive shelving unit in a Ford Motor Company casting plant in Flat Rock, Michigan.

The factory worker needed to manually count some parts.

The five-story machine used to retrieve the castings was giving false readings, and the 25-year-old needed to go up there himself to get the actual count.

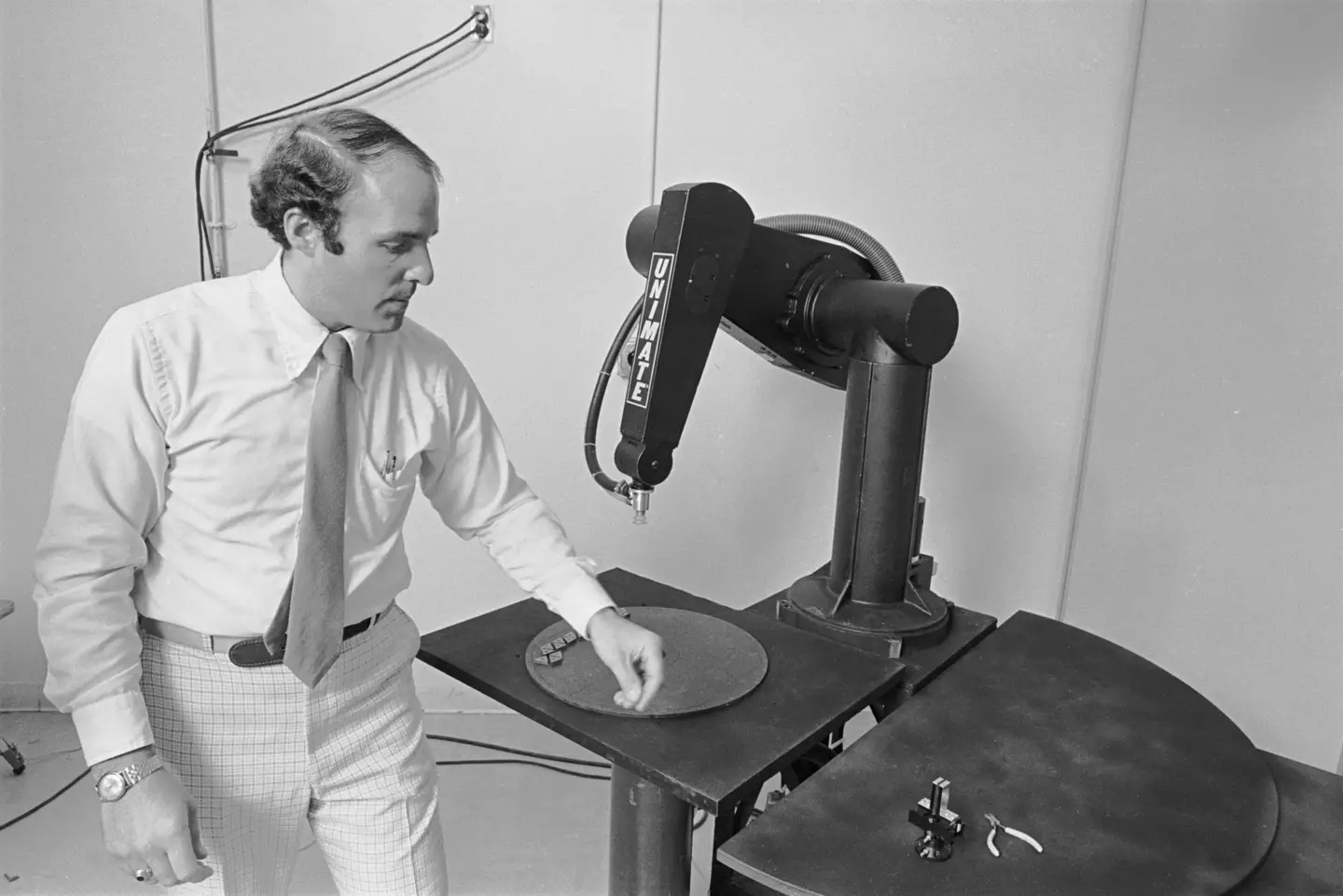

But a robot arm, part of a 1-ton production-line robot, was also tasked with retrieving these parts.

And while Williams was up there, the robot began to run slowly.

Williams was struck in the head by the robot’s arm and killed instantly.

As robots do, it simply carried on working, and the young man lay there dead for 30 minutes before co-workers found his body, according to Knight-Ridder reporting.

It was the first time in history that a human had been killed by a robot.

Williams’ family sued the robot’s manufacturer, Litton Industries, for his wrongful death and won $10 million (£7.8m).

The death was of course completely unintentional - the robot hadn’t set out to kill the man, it was just doing its regular job.

Even so, a jury agreed that not enough care had gone into the design, and the court concluded there simply hadn’t been enough safety measures in place to prevent this kind of tragedy from happening.

There were no alarms to alert Williams to the approaching robot arm, and technology in those days couldn’t alter how the robot responded to a human’s presence.

Since Williams’ death back in 1979, there have been other deaths relating to robots. Over two years later, Kenji Urada in Japan was accidentally pushed to his death by a robot arm which, again, failed to sense that he was nearby.

None of the deaths that have occurred were due to the will of the robot; they were simply accidents. But thanks to movies and sci-fi tales such as The Terminator, many think that AI could eventually develop this will.

Shimon Whiteson, a Professor of Computer Science at the University of Oxford, has called this the ‘anthropomorphic fallacy’.

His definition is: “The assumption that a system with human-like intelligence must also have human-like desires, e.g., to survive, be free, have dignity, etc.”

But he says: “There's absolutely no reason why this would be the case, as such a system will only have whatever desires we give it.”

So basically, unless someone actually designs a robot with the desire to kill us, robots won’t want to kill us.
